New York Times science writer Emily Anthes details her experience with MeowTalk in a new story, and she has come to more or less the same conclusions I did when I wrote about the app last year: it recognizes the obvious, like purring, but adds to the confusion over other vocalizations.

Back in January of 2021, in “MeowTalk Says Buddy Is A Very Loving Cat,” I wrote about using MeowTalk to analyze the vocalizations Bud makes when he wants a door opened. After all, that should be a pretty basic task for an app that exists to translate meows: Cats ask for things, or demand them, some would say.

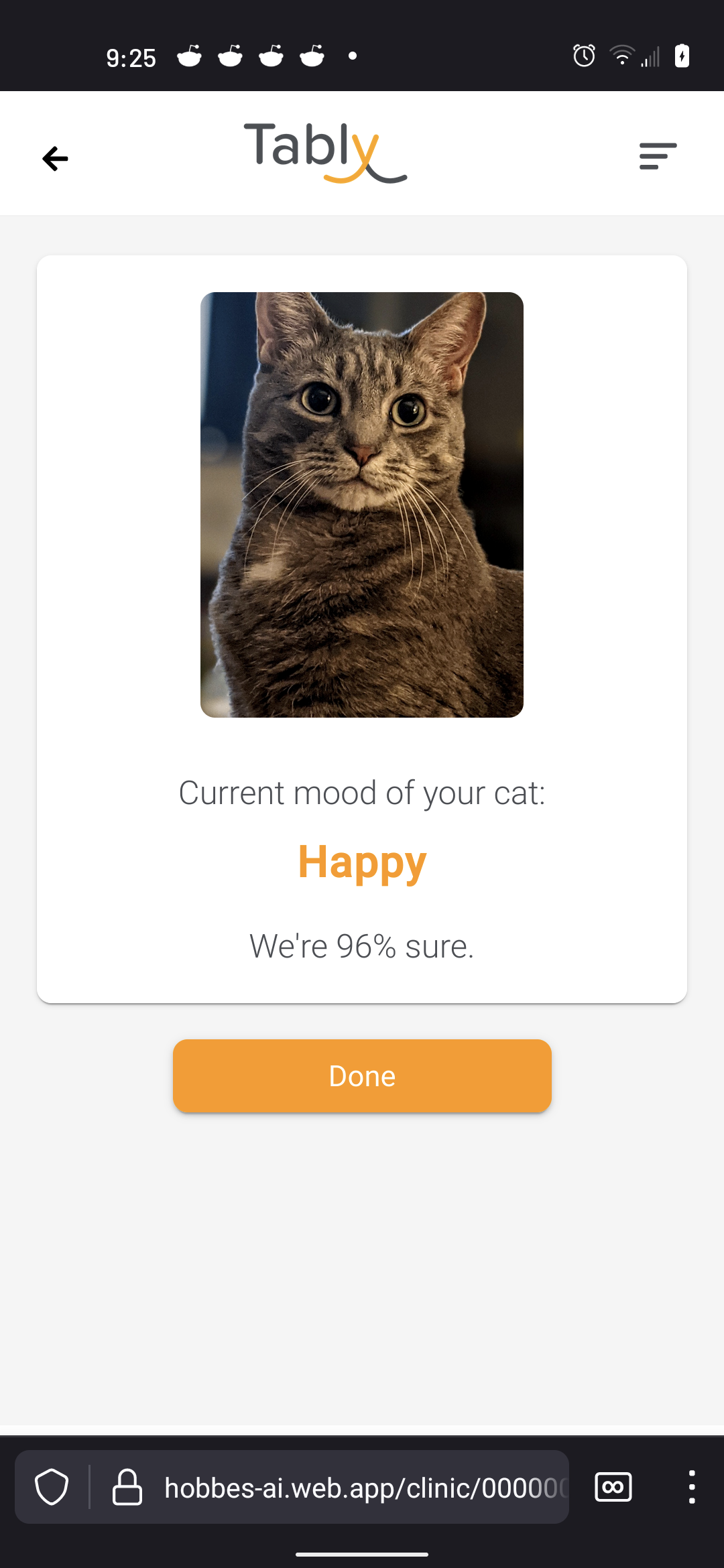

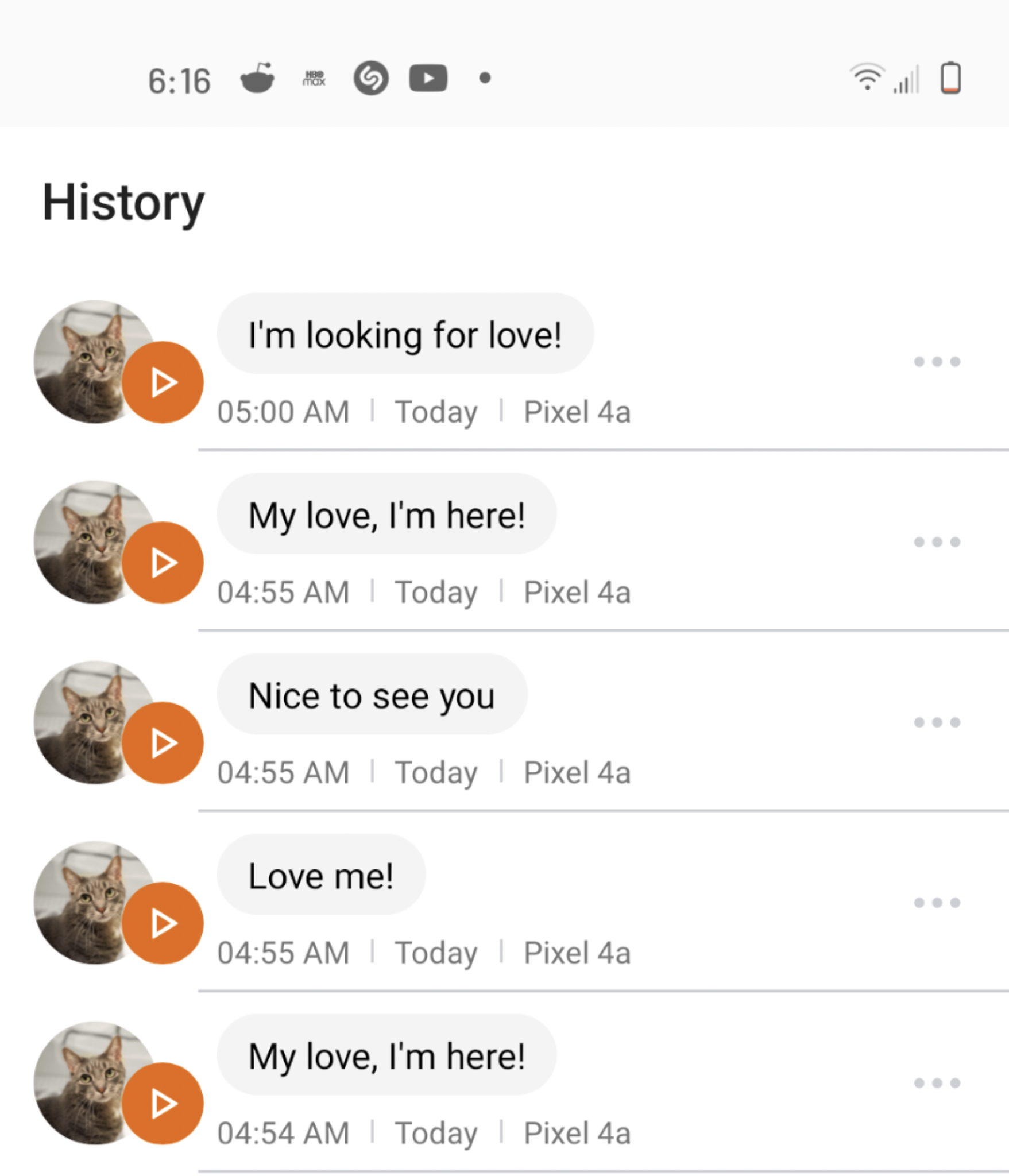

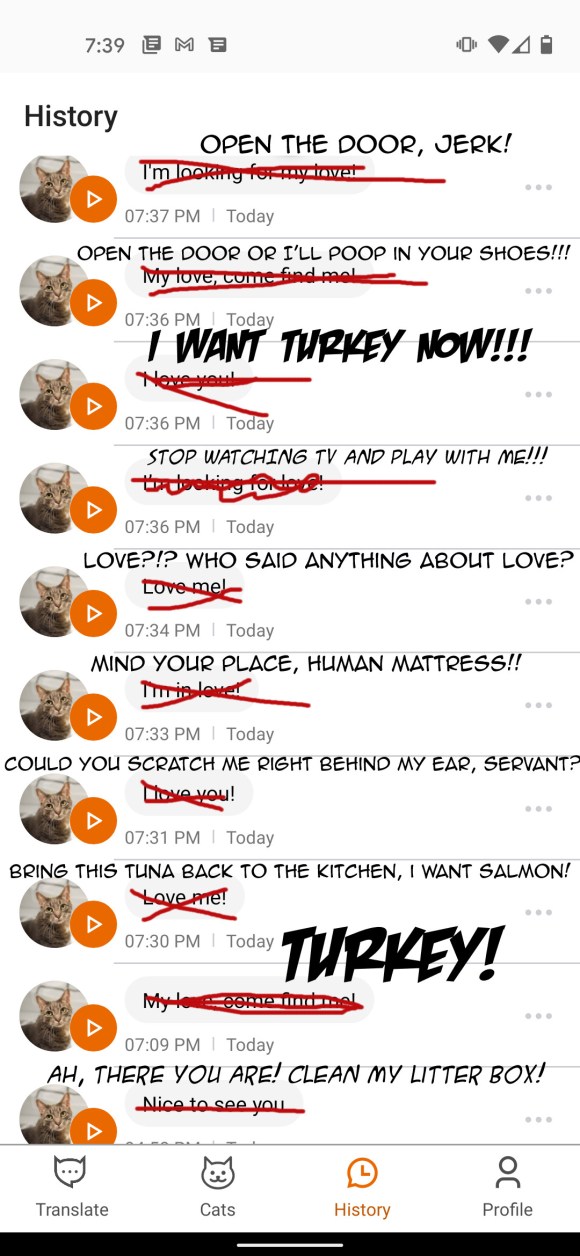

But instead of “Open the door!”, “I want to be near you!”, “Human, I need something!” or even “Obey me, human!”, it told me Bud was serenading me as he pawed and tapped his claws on the door: “I’m looking for love!”, “My love, come find me!”, “I love you!”, “Love me!”, “I’m in love!”

According to MeowTalk, my cat was apparently the author of that scene in Say Anything when John Cusack held up a boombox outside of Ione Skye’s bedroom window.

Anthes had a similar experience:

“At times, I found MeowTalk’s grab bag of conversational translations disturbing. At one point, Momo sounded like a college acquaintance responding to a text: ‘Just chillin’!’ In another, he became a Victorian heroine: ‘My love, I’m here!’ (This encouraged my fiancé to start addressing the cat as ‘my love,’ which was also unsettling.) One afternoon, I picked Momo up off the ground, and when she meowed, I looked at my phone: ‘Hey honey, let’s go somewhere private!’”

On the opposite side of the emotional spectrum, MeowTalk took Buddy’s conversation with me about a climbing spot for an argument that nearly came to blows.

“Something made me upset!” Buddy was saying, per MeowTalk. “I’m angry! Give me time to calm down! I am very upset! I am going to fight! It’s on now! Bring it!”

In reality the little dude wanted to jump on the TV stand. Because he’s a serial swiper who loves his gravity experiments, the TV stand is one of like three places he knows he shouldn’t go, which is exactly why he wants to go there. He’s got free rein literally everywhere else.

If MeowTalk had translated “But I really want to!” or something more vague, like “Come on!” or “Please?”, that would be a good indication it’s working as intended. The app should be able to distinguish between pleading, or even arguing, and the freaked-out, hair-on-end, arched-back kind of vocalizations a cat makes when it’s ready to throw down.

Still, I was optimistic. Here’s what I wrote about MeowTalk last January:

“In some respects it reminds me of Waze, the irreplaceable map and real-time route app famous for saving time and eliminating frustration. I was one of the first to download the app when it launched and found it useless, but when I tried it again a few months later, it steered me past traffic jams and got me to my destination with no fuss.

What was the difference? Few people were using it in those first few days, but as the user base expanded, so did its usefulness.

Like Waze, MeowTalk’s value is in its users, and the data it collects from us. The more cats it hears, the better it’ll become at translating them. If enough of us give it an honest shot, it just may turn out to be the feline equivalent of a universal translator.”

There are also indications we may be looking at things, or hearing them, the “wrong” way. Anthes spoke to Susanne Schötz, a phonetician at Lund University in Sweden, who pointed out that the inflection of a feline vocalization carries nuance. In other words, it’s not just the particular sound a cat makes, it’s the way that sound varies tonally.

“They tend to use different kinds of melodies in their meows when they’re trying to point to different things,” said Schötz, who is co-author of an upcoming study on cat vocalizations.

After forgetting about MeowTalk for a few months, I was dismayed to open the app and find ads wedged between translation attempts, along with prompts asking me to buy the full version so MeowTalk could translate certain things.

The developers need to generate revenue, so I don’t begrudge them that. But I think it’s counterproductive to put features behind paywalls when an application like this depends so heavily on people using it and feeding it data.

To use the Waze analogy again, would the app have become popular if it had remained the way it was in those first few days after it launched? At the time, I was lucky to see indications that more than a handful of people were using it, even in the New York City area. The app told me nothing useful about real-time traffic conditions.

These days it’s astounding how much real-time traffic information the app receives and processes, routing drivers handily around traffic jams, construction sites and other conditions that might add minutes or even hours to some trips. You can be sure that when you hear a chime and Waze wants to redirect you, other Waze users are transmitting data about a crash or other traffic impediment in your path.

MeowTalk needs more data to be successful, especially since — unlike Waze — it depends on data-hungry machine learning algorithms to parse the sounds it records. Like people, machine learning algorithms have to “practice” to get better, and for a machine, “practice” means hearing hundreds of thousands or millions of meows, chirps, trills, yowls, hisses and purrs from as many cats as possible.

That’s why I’m still optimistic. Machine learning has produced algorithms that can identify human faces and even invent them. It’s produced software that can write prose, navigate roads, translate the text of dead languages and even rule out theories about enduring mysteries like the Voynich Manuscript.

In each of those cases there were innovators, but raw data was at the heart of what they accomplished. If MeowTalk’s developers or another company can find a way to feed their algorithms enough data, we may yet figure our furry little friends out, or at least know what they want for dinner.